The Goldfish, The Elephant, and The AI: A User's Guide to the Wild World of Context

Part 1 of the "Beyond the Window" Series

Have you ever tried to explain an inside joke to someone new?

You start with the punchline, which lands with a dull thud. Realizing your mistake, you backtrack. "Okay, wait. So, three weeks ago, Dave was trying to order a coffee, but he accidentally said 'I'll have a large computron,' and the barista, without missing a beat, just asked him if he wanted extra RAM with that."

You look up, expecting laughter. Nothing. The new person is politely smiling, but their eyes are blank. They heard the words, but they missed the context: Dave's chronic sleep-deprivation, the deadpan humor of that specific barista, the fact that you've all been working on a stressful tech project named "Computron." Without that shared history, the joke is just a meaningless string of words.

For years, talking to an AI was like explaining an inside joke to a stranger, over and over again. It had no memory, no shared history, and no idea what a "computron" was. But that's changing at lightning speed. To understand the AI revolution, you don’t need to learn to code; you need to understand Context.

First, Meet the Goldfish: AI's Teeny-Tiny Memory

Not long ago, every Large Language Model (LLM) had the approximate memory of a goldfish—specifically, a goldfish in a very, very small bowl.

This bowl is called the "context window." It is the maximum amount of information the AI can "see" at any one time. That information is measured in "tokens," which are roughly equivalent to words or parts of words. Early models like GPT-2 had a context window of about 1,000 tokens (1,024, to be exact), or around 750 words.
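For readers who like to see the arithmetic, here is a minimal Python sketch of that rule of thumb. It assumes about 0.75 words per token (the ratio implied by "1,000 tokens, or around 750 words"). Real tokenizers vary by model and language, and the function names are made up for illustration.

```python
# Rough rule of thumb from the text: 1,000 tokens is about 750 words.
# Real tokenizers differ by model and language, so treat this as an estimate.
WORDS_PER_TOKEN = 0.75


def estimate_tokens(text: str) -> int:
    """Estimate the token count of `text` from its whitespace-separated word count."""
    word_count = len(text.split())
    return round(word_count / WORDS_PER_TOKEN)


def fits_in_window(text: str, window_tokens: int = 1000) -> bool:
    """Return True if the estimated token count fits in the context window."""
    return estimate_tokens(text) <= window_tokens
```

By this estimate, a 750-word essay just fills a 1,000-token bowl, while a 900-word essay (about 1,200 tokens) overflows it.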

Imagine trying to have a meaningful conversation with someone who could only remember the last two paragraphs you wrote. You’d constantly have to repeat yourself.

You: "My company, Acme Innovations, is launching a new product called the Quantum Widget. It’s designed to optimize synergistic workflows."

AI: "Tell me more about this product."

You: "The Quantum Widget is special because..."

AI: "What company did you say you were from?"

This wasn't because the AI was dumb; it was because its memory "bowl" was so small that the beginning of the conversation had already spilled out by the time you got to the end. It was a brilliant brain trapped in a state of perpetual amnesia.
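That "spilling" can be sketched in a few lines of Python. This is a simplified illustration, not how any particular chatbot is implemented: it counts words as a stand-in for tokens, and the names `trim_to_window` and `count_words` are hypothetical.

```python
from typing import Callable, List


def count_words(message: str) -> int:
    """Stand-in for a real tokenizer: one whitespace-separated word = one token."""
    return len(message.split())


def trim_to_window(
    messages: List[str],
    window_tokens: int,
    count_tokens: Callable[[str], int] = count_words,
) -> List[str]:
    """Keep the most recent messages that fit in the window, in original order.

    Walks backwards from the newest message and stops at the first one
    that would overflow the budget, so the oldest messages spill out first.
    """
    kept: List[str] = []
    used = 0
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > window_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()
    return kept
```

Feed it the conversation above with a small enough window and the opening message, the one that names Acme Innovations, is the first to go. The model is then answering without ever "seeing" the company name.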

Then, the Goldfish Evolved into an Elephant

Then, seemingly overnight, the goldfish bowl shattered and was replaced by a palace. A memory palace.

The context window didn't just get bigger; it underwent a ludicrous-speed expansion. Today, models like Anthropic's Claude 3.5 Sonnet boast a 200,000 token window, while Google's Gemini 1.5 Pro offers an astonishing 1 million tokens.

Let’s put that in perspective. 1 million tokens is not a bigger fishbowl. It’s the entire ocean. By the same rule of thumb as before, that's roughly 750,000 words: enough to hold The Lord of the Rings trilogy and still have room for The Hobbit.

Suddenly, the AI wasn't a goldfish anymore. It was an elephant.

This changes the nature of the conversation entirely. You can now have a "dinner party" with an AI. You can "feed" it an entire business report, a complex legal contract, or the full transcript of a two-hour meeting, and then start asking questions.

You: "Based on the Q3 financial report I gave you, what were the primary drivers of our revenue dip in the European market?"

AI: "According to page 87, the dip was primarily driven by new regulations in Germany and a supply chain issue with your distributor in France, which was first mentioned in the appendix on page 214."

See the difference? It remembers. It can connect ideas across a vast expanse of information. The AI can finally understand the "inside jokes" because it has access to the entire backstory.

Uh Oh. The Elephant is Getting Brain Fog.

So, problem solved, right? Bigger memory, smarter AI. The end.

Not so fast. It turns out that giving an AI a library doesn't automatically make it a tenured professor. Sometimes, it just gives it more places to lose its car keys.

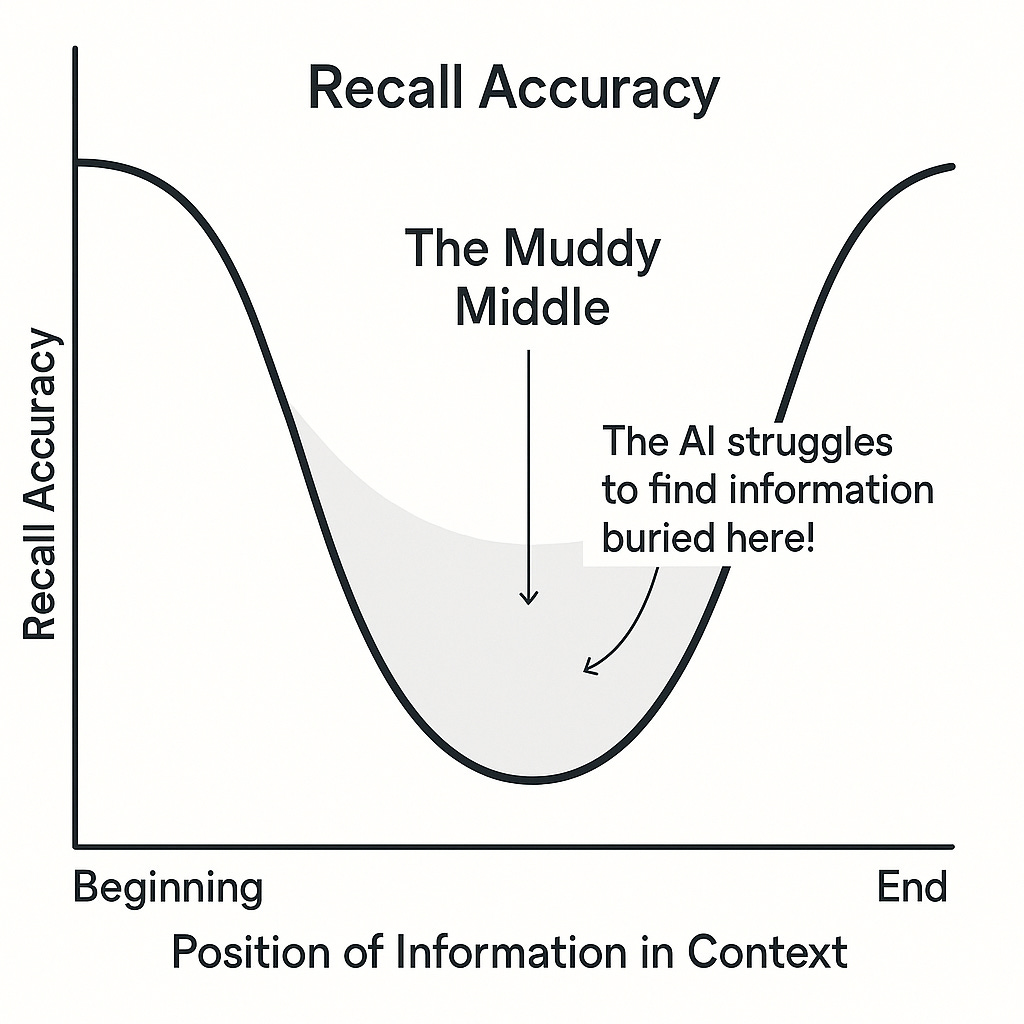

Researchers quickly discovered a bizarre and frustrating paradox: as the context gets extremely long, the AI's performance can start to degrade. This phenomenon is sometimes called "context rot" or, more descriptively, the "muddy middle." In the research literature, the pattern is often described as information getting "lost in the middle."

It’s like sitting down with a long-lost relative who insists on showing you their entire 500-page life story in a photo album. The photos at the beginning are fascinating ("Wow, that was your first dog, Buster!"). The photos at the end are clear ("Oh, this was from your trip last week!"). But the photos in the middle? Page 312, detailing a cousin’s graduation party in 1997? Your brain just glazes over. It’s a blur.

LLMs can do the same thing. They tend to pay the closest attention to the beginning and the end of the context you give them, but their focus can wander and get "muddy" in the middle. They can miss that key detail buried on page 312 of your report, even though it's technically right there in their context window. The elephant, for all its capacity, is suffering from brain fog.
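One common way to check for this kind of fog is a "needle in a haystack" test: hide one fact at different depths in a long stretch of filler text and see whether the model can still retrieve it. The Python sketch below only builds the test. `ask_model` is a hypothetical stand-in for whatever function sends a context and a question to a model and returns its answer as text, and the other names are illustrative too.

```python
from typing import Callable, Dict, List


def build_haystack(filler: List[str], needle: str, depth: float) -> str:
    """Place `needle` among the `filler` sentences at a relative depth.

    depth=0.0 puts the needle at the very start, 1.0 at the very end.
    """
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    index = round(depth * len(filler))
    sentences = filler[:index] + [needle] + filler[index:]
    return " ".join(sentences)


def probe_depths(
    ask_model: Callable[[str, str], str],  # hypothetical: (context, question) -> answer
    filler: List[str],
    needle: str,
    question: str,
    expected: str,
    depths: List[float],
) -> Dict[float, bool]:
    """For each depth, record whether the model's answer contains `expected`."""
    results: Dict[float, bool] = {}
    for depth in depths:
        context = build_haystack(filler, needle, depth)
        answer = ask_model(context, question)
        results[depth] = expected.lower() in answer.lower()
    return results
```

If a model suffers from a muddy middle, the results would tend to show hits at depths near 0.0 and 1.0 and misses somewhere in between. How pronounced that dip is depends on the model and the length of the haystack.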

The Next Leap: The AI Gets Eyes and Ears

If making the memory bigger isn't a perfect solution, what's next? The answer isn't just about helping the AI remember more; it's about helping it perceive more.

The latest generation of models, like OpenAI's GPT-4o and Google's Gemini family, are multimodal. This is a fancy way of saying that their context is no longer limited to text. They can now see and hear.

This is arguably a bigger leap than the expansion of the context window. The AI is no longer just a disembodied brain you type at. It’s becoming a partner with senses.

You can show it a picture of the contents of your fridge and ask, "What can I make for dinner?"

You can have it watch a video of your golf swing and ask, "Why does my shot keep slicing to the right?"

You can let it listen to a meeting recording and say, "Generate a list of action items and assign them to the correct person."

This is the shift from purely linguistic context (the words on the page) to situational context (what's happening in the world). The AI can now "read the room" in a much more literal way. It's one thing to describe a wilted plant in text; it's another to show the AI a picture of it. This richer, multi-layered context is where the future lies.

From a Goldfish to... Something Else Entirely

So, where does that leave us?

We've watched our AI companions evolve at a dizzying pace. They started as brilliant goldfish, trapped by the tiny bowls of their memory. They grew into mighty elephants, capable of remembering entire libraries, only for us to discover that a massive memory can come with its own form of brain fog. And now, these elephants are growing eyes and ears, learning to perceive the world in ways that were science fiction just a couple of years ago.

The concept of "context" is no longer a simple technical limitation. It is the central stage where the future of artificial intelligence is playing out.

But if a giant, all-perceiving memory isn't the final answer, what is? How do we build an AI that doesn't just have context but truly understands it? How do we get an AI that not only remembers the joke, but knows why it's funny?

Coming up in Part 2: We’ll pull back the curtain on the engineering magic that makes this possible. Get ready to explore the world of "RAG," the "cocktail party effect" in AI, and the clever tricks developers are using to turn these brilliant-but-forgetful elephants into truly intelligent partners.