The Future of UI: From Command Line to Conversational AI

From Commands to Conversations: Designing for Intent in the Age of AI

We are in the midst of a massive computing paradigm shift, and AI is driving a transformation of the interface along with it. As AI spreads across consumer and enterprise use cases, the UI has to evolve to bring out the best of AI. In this post, we will try to understand the evolution of interfaces and how product design will shape user experience in the future.

Early User Interfaces: Command Lines to GUI

In the early era of computing, using a computer meant dealing with text-based commands. Through the 1970s, the command-line interface (CLI) – a blank screen for typing instructions – dominated how people interacted with machines. This required users to memorize exact commands and syntax, making early computing accessible only to specialists.

A major shift began in the 1980s with the advent of graphical user interfaces (GUIs). Research at Xerox PARC in the ’70s (e.g. the Xerox Alto in 1973) introduced the idea of visual desktops, icons, and a mouse pointer. Apple’s Lisa (1983) and Macintosh (1984) popularized the GUI, allowing users to interact with windows, menus, and icons instead of typing every command.

By the 1990s, GUIs were mainstream across personal computers (from Microsoft Windows to Mac OS), firmly replacing the command line for most users. This era made computing far more user-friendly – people could now click buttons and manipulate graphics on screen, a revolutionary change from the text-only days.

User interface paradigms have shifted over time – from batch processing in early mainframes to command-based interactions (CLI and GUI), and now toward AI-driven “intent-based” interfaces where users specify goals in natural language. (Source: Nielsen Norman Group)

In the 1990s, Apple hired cognitive scientist Don Norman, who coined the term “user experience” and advocated designing around the user’s needs. By 2000, good UI/UX had proven its business value: an intuitive interface often meant a more successful product.

The 21st Century: Web, Mobile and Multi-Touch Revolution

After 2000, user interfaces underwent continuous innovation driven by the internet and mobile computing. Web applications became richer in the 2000s – technologies like AJAX enabled desktop-like interactivity in the browser, and designers embraced cleaner layouts as screens and bandwidth improved. A monumental shift arrived with smartphones, especially after Apple’s iPhone (2007) popularized multi-touch screens and introduced a novel mobile UI paradigm.

In the late 2000s, a myriad of phone designs (keypads, styluses, flip phones) converged into the now-familiar “slab of glass” touchscreen form factor. Multi-touch gestures (tap, swipe, pinch) became universal UI actions, and mobile apps forced designers to simplify interfaces for small screens. By the 2010s, mobile-first design was the norm – interfaces were crafted for small touchscreens before desktop, reflecting the explosion of smartphone usage.

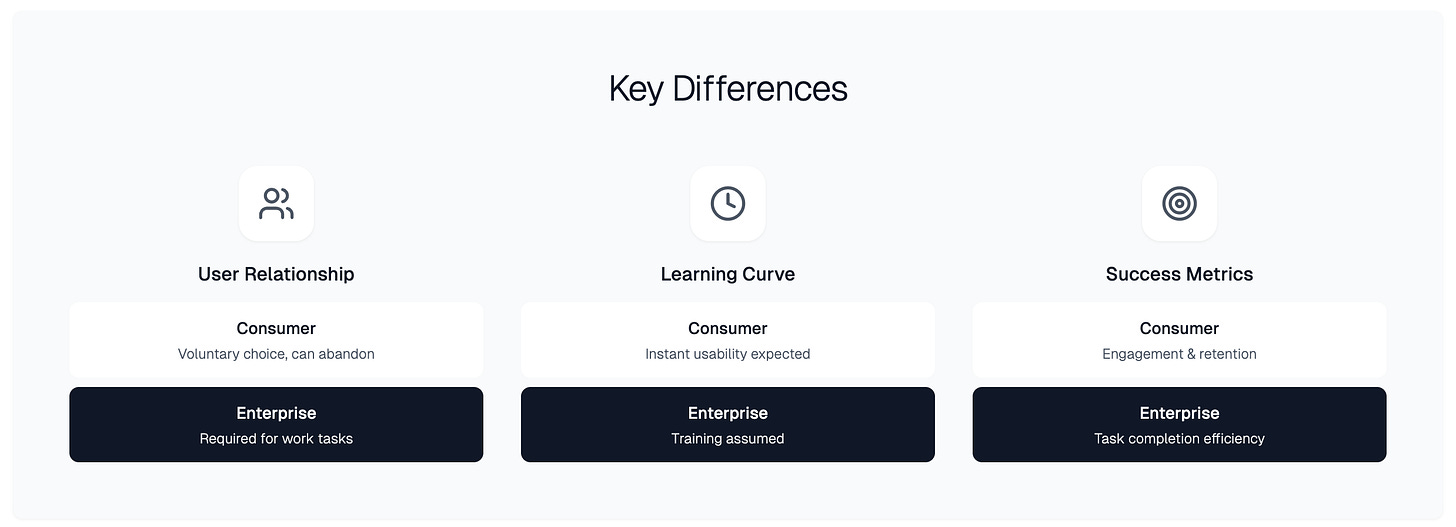

Consumer vs Enterprise Design

In the last decade, the gap between enterprise and consumer UI expectations has narrowed. The “consumerization of IT” trend means that workers now expect their office software to be as user-friendly as the apps they use at home. Modern enterprise tools increasingly borrow design cues from consumer apps with cleaner interfaces, mobile accessibility, and user-centric workflows to boost adoption and productivity.

For instance, Google’s introduction of simple, web-based Google Docs pushed Microsoft to redesign its Office suite for the web and improve usability. Today, enterprise UX teams strive to balance complex power features with simple, guided experiences.

It’s a delicate design challenge: enterprise software must satisfy rigorous business requirements and diverse user roles, but if it isn’t intuitive, employees will find workarounds or resist using it.

In summary, consumer and enterprise UIs have unique considerations (engagement vs. efficiency, broad vs. specialized users), yet the two realms now influence each other. Good UX design, which is all about understanding users and reducing friction, has become universally important, whether you’re building the next TikTok or an internal CRM system.

AI Enters the Interface

In the early 2020s, AI capabilities took a dramatic leap forward with generative AI, especially large language models. The release of OpenAI’s ChatGPT (late 2022) was a watershed moment. For the first time, a widely available chatbot could engage in extended, coherent conversations, handle diverse topics, and produce paragraphs of useful text. This kicked off a race to integrate AI “chat” interfaces everywhere. Chat is emerging as a universal UI to access AI’s power – essentially a text box where you can request anything from drafting an email to analyzing data, and get an intelligent response.

Unlike earlier bots confined to narrow tasks, these AI models are general-purpose problem-solvers, which makes the chat interface incredibly versatile. In response, nearly every major tech platform introduced its own AI chat or assistant:

Search engines like Google and Bing embedded chat modes to answer queries in a conversational way.

Productivity suites added AI copilots (e.g. Microsoft 365 Copilot in Word, Excel, etc.) where you can ask the AI to create content or summarize information right within the app.

Messaging and social apps also jumped in – for example, Snapchat added a GPT-powered chatbot called “My AI” for its users, letting people ask questions or get recommendations inside the chat window.

Programming IDEs added chat to simplify coding, debugging and deployment workflows for developers.

This trend essentially bolts an AI conversational layer onto all kinds of applications. It’s becoming common to see a little “Ask AI” text box or button in software from note-taking apps to customer support websites.

The chat UI has, in a short time, become a dominant design pattern for AI interactions. In fact, the full-screen chat layout popularized by ChatGPT (a big text area for the conversation, with past dialogues in a sidebar) is now the standard that many others follow. Google’s Gemini, Anthropic’s Claude, OpenAI’s own interface, and numerous startups have converged on this familiar chat format, indicating that a common UI paradigm for AI has emerged. For users, this consistency is helpful: if you’ve used one AI chat, you can navigate others easily.

Why is chat becoming so ubiquitous?

One reason is usability and universality. Language is a natural interface: anyone can describe what they want in words. A chat-based UI lets users express a goal (“find trends in this spreadsheet” or “suggest a slogan for my campaign”) without hunting through menus or learning complex software features.

It effectively turns every task into a conversation with an intelligent assistant. Moreover, a conversational UI is flexible: the AI can ask clarifying questions, and the user can refine their request in a back-and-forth loop, much like interacting with a human helper.

This versatility means a single chat interface can potentially front-end countless functions across different domains. It’s important to note, however, that chat isn’t a silver bullet for every interaction.

Visual tasks (like precise design work or data visualization) still benefit from graphical controls and direct manipulation. We’re beginning to see hybrid UIs where chat is the command interface but the output can include modern UI elements – e.g. ask an AI to create a chart and it displays an interactive chart, not just a text description.

In many cases, chat and GUI elements complement each other; for example, an AI might generate a form or a draft document that the user can then tweak using conventional tools. Still, the explosion of chat as a UI in recent years underscores a major shift: interface design is expanding beyond fixed screens and buttons, into fluid, conversational interactions.

Future Outlook: Intent-Led UI in the Age of AI

With AI becoming more widespread and capable, we are likely at the cusp of a new paradigm in user interfaces. Tech experts describe this as moving from today’s “command-based” interaction to “intent-based” interaction.

Instead of telling a computer how to do something through explicit clicks and commands, users will simply tell the computer what they want, and the AI will figure out the execution. Jakob Nielsen (a pioneer in usability) calls AI-driven interfaces the first new UI paradigm in decades, noting that it reverses the locus of control: you specify the desired outcome, and the system handles the steps. In practical terms, this could mean no longer juggling dozens of apps and interfaces to get things done.

AI agents could act as a unified layer over our digital lives. For example, rather than manually opening your calendar app, email, airline website, and maps to plan a business trip, you might just tell an AI assistant, “I need to be in Dubai next Monday, arrange the trip.” The AI would then coordinate across services – book flights, schedule meetings, reserve a hotel, add events to your calendar – and present you with the plan. Some early experiments already hint at this cross-app capability. Industry leaders predict that soon “users will interact with AI agents that understand context and can seamlessly perform tasks across multiple domains,” freeing us from hopping between siloed apps. At Yellow.ai, we are enabling businesses to build such AI agents, which take actions on behalf of users through a next-generation, intent-based UI.
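To make the idea of one request fanning out across several services more concrete, here is a minimal Python sketch. Everything in it is hypothetical: the `Intent` type, the three service functions, and the fixed `PLANS` table are illustrative stand-ins. A real agent would use a language model to interpret the request and plan the steps rather than a lookup table, and this is not meant to depict any particular product’s implementation.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical service stubs standing in for the separate apps a user
# would otherwise open by hand (airline site, hotel site, calendar).
def book_flight(city: str, day: str) -> str:
    return f"Flight to {city}, arriving {day}"

def reserve_hotel(city: str, day: str) -> str:
    return f"Hotel in {city} from {day}"

def add_calendar_event(city: str, day: str) -> str:
    return f"Calendar: trip to {city} on {day}"

@dataclass
class Intent:
    goal: str   # what the user wants, e.g. "travel"
    city: str
    day: str

# Assumption for illustration: a fixed goal -> steps table.
# A real agent would plan these steps dynamically.
PLANS: dict[str, list[Callable[[str, str], str]]] = {
    "travel": [book_flight, reserve_hotel, add_calendar_event],
}

def run_agent(intent: Intent) -> list[str]:
    """Expand one high-level intent into service calls and collect the results."""
    steps = PLANS.get(intent.goal)
    if steps is None:
        raise ValueError(f"No plan for goal: {intent.goal!r}")
    return [step(intent.city, intent.day) for step in steps]

# The user states the outcome once; the agent handles the individual steps.
plan = run_agent(Intent(goal="travel", city="Dubai", day="Monday"))
for line in plan:
    print(line)
```

The user-facing surface is a single call carrying the goal; which apps get touched, and in what order, is the agent’s concern. That is the “locus of control” reversal in miniature.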

In fact, apps may increasingly become behind-the-scenes providers, with the AI agent as the main face the user interacts with. Microsoft’s Windows and Office platforms, for instance, are integrating system-wide copilots that listen for user intentions (via chat or voice) and then invoke the appropriate app features automatically.

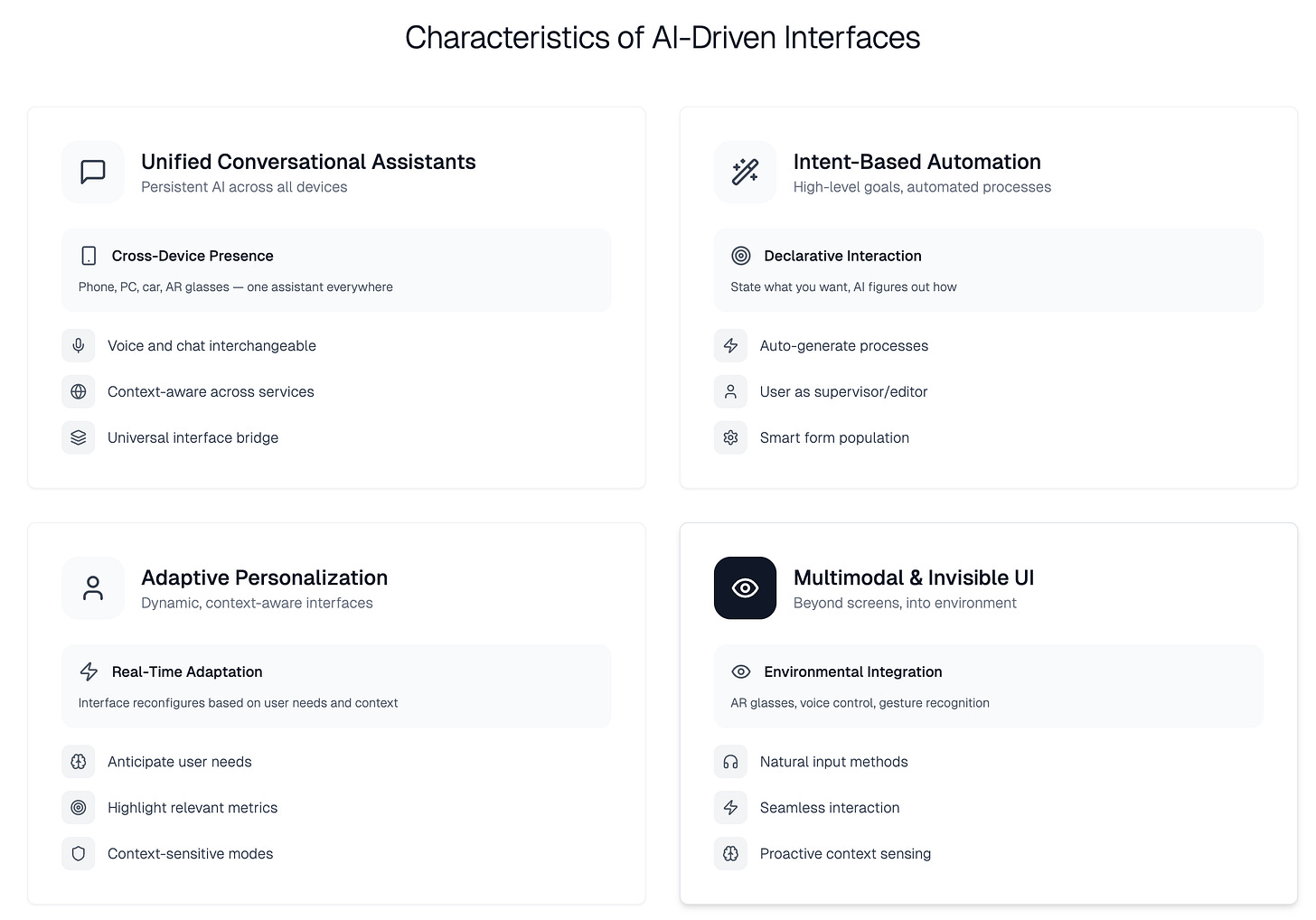

What does this mean for the look and feel of UIs? We can expect interfaces to become more intent-driven, conversational, personalized, and invisible. A few high-level changes on the horizon include:

Unified Conversational Assistants: A persistent AI assistant in our devices (phone, PC, car, AR glasses) could become the primary way we initiate tasks. This assistant would have awareness of your context (calendar, preferences, location) to handle requests intelligently. As Qualcomm’s CEO remarked in 2025, “once the AI understands your intention… it’s going to be this assistant that you have with you all the time,” operating across apps and devices. Voice and chat will be interchangeable inputs for this assistant, depending on what’s convenient at the moment. In effect, the AI becomes a universal interface bridging many services.

Intent-Based UI and Automation: The traditional GUI isn’t going away, but AI will change how it’s used. We’ll see more UIs where users set high-level goals and the system auto-generates the process. Already, AI can generate working UI code or prototypes from descriptions, hinting at generative UI design tools. In complex enterprise software, users might rely on AI to populate forms, run analyses, or find the right configuration, instead of digging through menus. This shifts the user’s role to more of a supervisor or editor: you state what you want, review the AI’s output, and adjust as needed. It’s a more declarative interaction style (state the intent) versus the imperative clicking of every step.

Adaptive and Personalized Interfaces: With AI, interfaces can become far more dynamic and tailored to the user’s needs in the moment. We already see simple versions of this (e.g. smartphone home screens suggesting apps or actions based on routine, websites adjusting content to user behavior). In the future, an AI-powered UI could reconfigure itself on the fly. Imagine an enterprise dashboard that learns which metrics you care about and rearranges to highlight those, or a news app that automatically switches to a calmer, audio-only mode that reads stories aloud if it senses you’re driving. AI will let interfaces anticipate user needs – maybe your AI assistant pre-fills a meeting summary template because it knows you have a debrief call coming up – reducing the amount of manual interaction needed. This kind of personalization could improve productivity and accessibility, as the interface molds itself to the user rather than offering one-size-fits-all screens.

Multimodal and “Invisible” UI: Future UIs will likely span beyond screens into our environment. Augmented Reality (AR) glasses, for instance, could overlay information guided by an AI that understands what you’re looking at. The Qualcomm vision suggests AR glasses acting as “wearable AI,” constantly ready to provide context or assistance in your field of view. In cars, voice-controlled AI with heads-up displays might handle everything from navigation to communications so that drivers can keep their eyes on the road. The aim is that interacting with technology feels seamless – the interface disappears into daily life. This echoes the design mantra that “the best interface is no interface,” wherein the technology works in the background with minimal explicit fiddling. AI’s ability to interpret natural inputs (speech, vision, gestures) means we won’t always need a traditional GUI to mediate our actions – talking or even just automated context sensing could trigger what we need. Of course, there will still be screens and visuals, but they might manifest only when useful. For example, a smart home system might simply act on your spoken request to adjust lighting, without any app UI, or proactively adjust it based on learned preferences without being asked at all.

New UX Challenges and Opportunities: As UI shifts to AI-driven interactions, designers and developers face new challenges. Ensuring usability and trust in an AI interface is paramount. Current chatbots, for instance, can sometimes give incorrect answers or behave unpredictably, which is a UX issue in itself. Jakob Nielsen points out that today’s AI tools have “deep-rooted usability problems” – they require carefully crafted prompts and even “prompt engineers” to get the best results. Future UIs must abstract that complexity away so that anyone can get value without specialized skills (just as early web search engines evolved to understand natural queries, eliminating the need for Boolean logic expertise). Transparency will be key: UIs might need to show confidence levels or explain AI actions (“I booked this flight because it’s the cheapest and aligns with your schedule”) to build user confidence. Additionally, there’s a risk that some users (perhaps those less articulate or tech-savvy) could be left behind if interfaces go too far toward all-chat or all-voice. Designers will likely incorporate guidance, suggestions, and fallback options – for example, providing example prompts or GUI shortcuts for common actions – to make AI UIs approachable. On the flip side, AI can enhance UX research and design itself; generative AI can rapidly prototype designs, simulate user interactions, or personalize experiences at scale, which means product teams can iterate faster and perhaps even involve end-users in co-creating their ideal interface.
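The transparency point suggests one concrete design habit: have the same logic that makes a decision also produce the explanation the UI shows, rather than writing the explanation afterwards. The Python sketch below illustrates this with the flight example quoted above. The `Flight` type, `choose_flight` function, and the flight data are all made up for illustration and do not represent any real booking API.

```python
from dataclasses import dataclass

@dataclass
class Flight:
    code: str
    price: float
    arrives_hour: int  # local arrival hour, 0-23

def choose_flight(flights: list[Flight], latest_arrival: int) -> tuple[Flight, str]:
    """Pick the cheapest flight that fits the schedule and explain why."""
    # Filter by the user's constraint first, then optimize for price.
    fitting = [f for f in flights if f.arrives_hour <= latest_arrival]
    if not fitting:
        raise ValueError("No flight arrives in time")
    best = min(fitting, key=lambda f: f.price)
    # The rationale is built from the same values used to decide.
    reason = (
        f"I booked {best.code} because it's the cheapest of {len(fitting)} "
        f"flights arriving by {latest_arrival}:00."
    )
    return best, reason

# Hypothetical flight data for illustration only.
flights = [Flight("EK1", 450.0, 9), Flight("EK2", 380.0, 14), Flight("QR3", 410.0, 8)]
best, why = choose_flight(flights, latest_arrival=10)
print(why)
```

A real assistant would weigh more factors (meeting times, preferred airlines, loyalty programs), but returning the reason together with the result is what lets the interface say why it acted, not just what it did.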

In conclusion, the UI is no longer just the series of screens and buttons we present to the user; it’s becoming an ongoing conversation and partnership between the user and an intelligent system.

The history of UI shows constant progression toward more natural and empowering interactions: from command lines, to visual desktops, to touch and mobile, and now to AI-driven conversations. Each step removed barriers between humans and technology, and the AI-infused future promises to continue that trajectory.

Chat-based and AI-assisted interfaces are rapidly emerging as a kind of universal access point to computing capabilities, whether in consumer apps or enterprise workflows. The future of UI design will be about refining this partnership – making sure the AI understands the user’s intent and the user trusts the AI’s assistance.

It’s an exciting time where decades of UI/UX principles will mix with cutting-edge AI to define how we all get things done. The ultimate goal remains what it has always been: to make technology an invisible ally that amplifies what people can achieve, with interfaces that adapt to us (not the other way around) in an increasingly intelligent way.

Thanks to Apoorva and Amropalli for reading and providing feedback on the article.
